Authors: Priyasha Purkayastha, Global Content Manager, TJC Group | Laura Parri Royo, Marketing Director, TJC Group

Technical Experts/Co-authors: TJC Group Archiving Council

The impact of digitalisation today is hard to overstate. According to a Yale-led study, global internet usage increased by 40% during just the first few months of the COVID-19 pandemic, with an additional 42.6 million megawatt-hours of electricity usage. Now multiply this by the hours businesses put in and the enormous volumes of data generated across industries. Organisations need storage space for all this data, whether in the cloud, on-premises, or in a hybrid setup, and the production of that storage never stops. The result is a never-ending cycle of digital carbon emissions, e-waste, and expensive storage costs. While data generation cannot be stopped (it will only increase in the coming years), SAP data archiving is one of the most sought-after ways to curb these challenges.

Table of contents

- 1. Introduction to SAP data archiving

- 2. Overview of SAP data archiving in data management

- 3. Does SAP data archiving differ in SAP S/4HANA and SAP ECC/NetWeaver?

- 4. Data archiving in SAP S/4HANA

- 5. Data Archiving in preparation for S/4HANA migration

- 5.1 Technical considerations before migrating to S/4HANA

- 5.2 Data Volume Management recommendations for SAP S/4HANA migration

- 5.3 The approaches for S/4HANA migration process

- 5.3 Advantages of each scenario

- 5.4 Tools for each migration scenario

- 5.5 How does data archiving fit into each migration approach?

- 5.6 Implementation Customer/Vendor Integration (CVI)

- 5.7 Migrating data archiving processes from SAP Legacy systems to SAP S/4HANA

- 6. Strategies to optimise data volumes in SAP S/4HANA

- 7. Legal compliance, data privacy and data archiving

- 8. Automating data archiving and data deletion with the Archiving Sessions Cockpit

- 8.1 The need for automated SAP data archiving solutions

- 8.2 Archiving Sessions Cockpit | Your go-to software for SAP data archiving

- 8.3 The development of the Archiving Sessions Cockpit

- 8.4 Functions of the Archiving Sessions Cockpit

- 8.5 The Archiving Sessions Cockpit for data deletion

- 8.6 Advantages of the Archiving Sessions Cockpit

- 8.7 Archiving Sessions Cockpit versus Jobs Scheduler

- 9. Strategy and Planning

- 10. Reduction of carbon footprint with SAP data archiving

- 11. Best practices to follow for SAP data archiving

- 12. How can TJC Group help with data archiving in SAP systems

1. Introduction to SAP data archiving

1.1 What is data archiving?

Archiving can be defined in several ways, but at its core, data archiving is a strategic approach that helps organisations manage data growth. It also optimises system performance and ensures efficient use of database resources within IT environments. In short, data archiving strikes a balance between retaining the necessary historical data and maintaining a lean, high-performing IT infrastructure.

SAP data archiving is a secure process for long-term data storage and retention. It gives organisations a secure location to store critical information until it is needed, for example during audits. Once data is archived, it remains accessible to authorised personnel while its integrity is protected. In the SAP world, data is archived using the Archive Development Kit (ADK) together with Archive Administration (transaction SARA). Outside the SAP world, other technologies exist, such as intermediate archive implementations.

Let’s understand this with an example –

Consider that your main system holds data on 1,000 clients. Only 300 of them are of immediate importance; the remaining 700 are not needed for now, which makes their data historical information. Yet this unused data still hampers your system's performance and eats into your storage capacity, which increases your operational and overall costs. What should you do? Delete their details? Not a smart option, as the data may be required later for audit purposes. Leave the data as it is and let it burden your IT landscape? Not a good option either. The smartest approach is to move the unused data to separate storage for long-term retention. This is precisely what data archiving does!

Data archiving has a plethora of benefits for an organisation – system performance and reduction in costs are just the top layers. Keep reading our detailed blog to learn extensively about archiving.

1.2 Types of archiving

More often than not, data archiving and document archiving are considered to be the same thing. However, they are strikingly different from one another. There is also a third type, fiscal archiving. Here's what you need to know about their differences:

Data archiving for structured data

As mentioned above, data archiving means moving data from closed business transactions out of the live system into offline or secondary storage. The key to data archiving is to set up a defined process and strategy that reduce manual effort and errors. You must also ensure compliance with legal data retention requirements.

Document archiving for unstructured data

The main difference between data archiving and document archiving is the type of data being archived. Document archiving deals with unstructured data such as sales invoices, PDFs, Word files, and so on. This type of archiving happens in real time, and the documents can be stored on any content server. However, make sure the link between the content server and your SAP systems remains active so that the archived documents stay accessible.

Fiscal archiving

A less talked-about type, fiscal archiving is the process of regularly freezing your data: the state of your data at a specified point in time is preserved by archiving it. Why is this done? Fiscal archiving safeguards the quality of your audit reports by guaranteeing the consistency of your original data.

1.3 Types of archiving objects

When embarking on a data archiving project in SAP, the first things to look at are the archiving objects. As defined by SAP, "an archiving object specifies precisely which data is archived and how. It describes which database objects must be handled together as a single business object."

When ILM is activated in the system, many archiving objects can be used in two ways:

- Data archiving: Archived data remain available.

- Data destruction: Archived data are destroyed.

In both cases, data are processed in two steps:

- Data is read from the database and written to archive files.

- Data is read from archive files and deleted in the database.
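To make the ordering of these two steps concrete, here is a toy model in Python. It is a minimal sketch under stated assumptions, not how the ADK is implemented: the "database" is a plain dict, the archive file is a JSON Lines file, and the names `write_phase` and `delete_phase` are hypothetical. What it illustrates is that records are deleted only after they have been read back successfully from the archive file.

```python
import json
import os
import tempfile


def write_phase(db: dict, keys: list, archive_path: str) -> None:
    """Step 1 (hypothetical): read selected records from the 'database' and write them to an archive file."""
    with open(archive_path, "w", encoding="utf-8") as f:
        for key in keys:
            f.write(json.dumps({"key": key, "data": db[key]}) + "\n")


def delete_phase(db: dict, archive_path: str) -> list:
    """Step 2 (hypothetical): read the archive file back and delete only the records found in it."""
    deleted = []
    with open(archive_path, encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            # Delete only what was verifiably written to the archive file.
            if db.get(record["key"]) == record["data"]:
                del db[record["key"]]
                deleted.append(record["key"])
    return deleted


if __name__ == "__main__":
    db = {"DOC-1": {"amount": 100}, "DOC-2": {"amount": 250}, "DOC-3": {"amount": 75}}
    path = os.path.join(tempfile.mkdtemp(), "run_0001.jsonl")
    write_phase(db, ["DOC-1", "DOC-2"], path)
    print(delete_phase(db, path))  # ['DOC-1', 'DOC-2']
    print(sorted(db))              # ['DOC-3']
```

Driving the delete step from what was read back, rather than from the original selection, reflects the idea that deletion works from the archive files.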

There are two main differences between archiving and destruction processes:

- With archiving, archive files remain available and archived data can be accessed and read. With destruction, archive files are always deleted at the end of the process.

- Except for a few objects, data is not protected by a residence time when archiving. With data destruction, a retention period is always mandatory. For example, you can archive an IDoc created yesterday if it is complete. But before running data destruction on this IDoc, you must first define a retention period in ILM customising.

One thing the two processes have in common is that only complete business objects are selected for archiving and destruction. For example, an accounting document with uncleared items will never be selected for archiving or destruction.
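The selection rules described above can be summarised as two eligibility checks. This is a hypothetical sketch: `BusinessObject` and both functions are illustrative names, not SAP APIs, and the sketch deliberately ignores the few archiving objects that do enforce a residence time.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class BusinessObject:
    object_id: str
    created: date
    complete: bool  # e.g. an accounting document whose items are all cleared


def eligible_for_archiving(obj: BusinessObject) -> bool:
    # Simplification: only completeness is checked, no residence time.
    return obj.complete


def eligible_for_destruction(obj: BusinessObject, today: date,
                             retention_days: Optional[int]) -> bool:
    if retention_days is None:
        # No retention period defined in ILM customising: destruction is not allowed.
        raise ValueError("a retention period must be defined before destruction")
    return obj.complete and today - obj.created >= timedelta(days=retention_days)


idoc = BusinessObject("IDOC-42", created=date(2024, 5, 1), complete=True)
print(eligible_for_archiving(idoc))                                          # True
print(eligible_for_destruction(idoc, date(2024, 5, 2), retention_days=365))  # False
```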

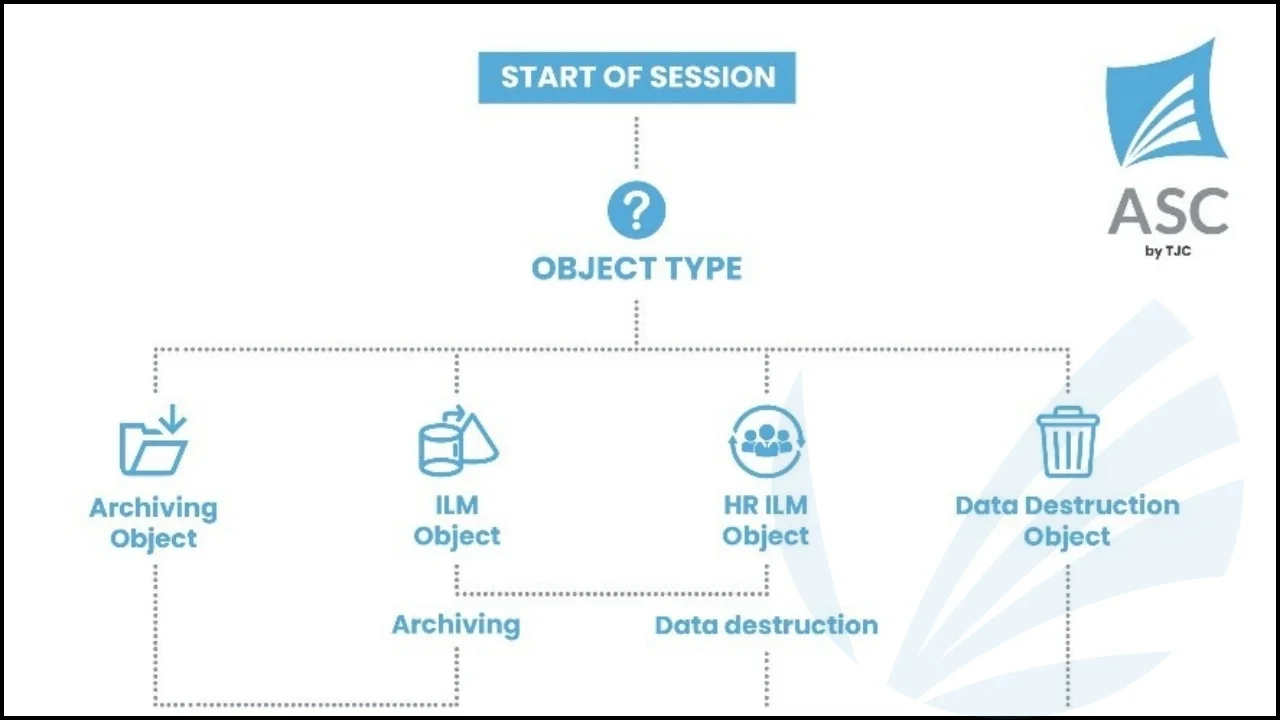

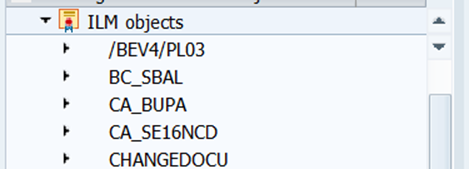

When ILM is activated, we can consider 3 types of archiving objects:

- Archiving object: it can only archive data.

- ILM object: this object can archive and/or destroy data.

- HR ILM object: this object can only run data destruction.

These three object types use archiving techniques. Whether an object can perform both archiving and destruction or only archiving or destruction is defined by SAP and may change over time.

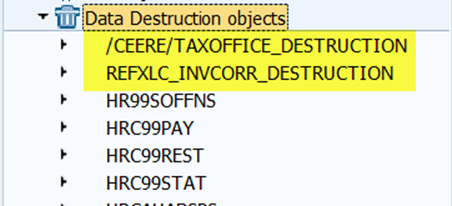

Note that a fourth object type (data destruction object) can be used when ILM is activated.

This object processes data for which no archiving object exists. It does not use archive files; instead, it deletes data directly from the database in a single step, like a classic housekeeping job. Unlike housekeeping jobs, however, it requires ILM customising, and a trace of each data destruction run is kept in the system.
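For contrast with the two-step, archive-based flow, the single-step behaviour of a data destruction object can be sketched as follows. The table-as-dict, the `is_expired` callback, and the trace format are all assumptions for illustration; in SAP, the expiry rules come from ILM customising.

```python
from datetime import datetime, timezone


def run_destruction_object(table: dict, is_expired, trace_log: list) -> int:
    """Delete expired rows directly from 'table' in one step and record a trace of the run."""
    expired = [key for key, row in table.items() if is_expired(row)]
    for key in expired:
        del table[key]  # no archive file is written
    trace_log.append({
        "run_at": datetime.now(timezone.utc).isoformat(),
        "deleted_count": len(expired),
    })
    return len(expired)


table = {"LOG-1": {"year": 2010}, "LOG-2": {"year": 2023}}  # hypothetical rows
trace = []
run_destruction_object(table, lambda row: row["year"] < 2015, trace)
print(sorted(table), trace[0]["deleted_count"])  # ['LOG-2'] 1
```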

1.4 Differences between data archiving and data destruction

Data archiving requirements

Data archiving is the only way to reduce the SAP database size and keep database growth at the minimum possible level. It also helps reduce costly memory growth when using in-memory databases such as HANA. It is essential to archive data as an ongoing process to achieve both results. For that, it is necessary to complete several actions, such as variant creation, job scheduling, run follow-up, and corrections.

Data destruction requirements

Data destruction using SAP ILM is the standard method provided by SAP to destroy data to comply with data privacy regulations. It is also the means of consistently and securely deleting old data that should no longer exist in the system in accordance with the company’s policies. Unlike data archiving, data destruction is mainly used to destroy data for legal requirements and, therefore, must be processed regularly.

Please note that data destruction is mandatory for compliance with data privacy laws such as the GDPR: certain data must be deleted to remain compliant, regardless of company size or database size.

Finally, it is worth pointing out the difference between data retention policies and legal holds. Data retention policies establish how and when data or documents must be destroyed. A legal hold, by contrast, pauses the destruction step to preserve the relevant data and documents for legal purposes.
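The interplay between a retention policy and a legal hold comes down to one rule: destruction is allowed only when the retention period has ended and no legal hold applies. The sketch below is hypothetical; the function name and the representation of holds as a set of record IDs are assumptions.

```python
from datetime import date


def may_destroy(record_id: str, retention_end: date, today: date, legal_holds: set) -> bool:
    """Allow destruction only after the retention period ends and if no legal hold applies."""
    if record_id in legal_holds:
        # A legal hold pauses destruction, whatever the retention policy says.
        return False
    return today >= retention_end


holds = {"INV-7"}  # hypothetical record under legal hold
print(may_destroy("INV-7", date(2020, 12, 31), date(2025, 1, 1), holds))  # False
print(may_destroy("INV-8", date(2020, 12, 31), date(2025, 1, 1), holds))  # True
```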

2. Overview of SAP data archiving in data management

2.1 Why should you archive data in SAP systems?

Stakeholders and decision-makers often face a dilemma: is data archiving in their SAP systems really a best practice? There are many reasons that support it, such as:

- Data privacy

- Overall cost reduction in operations

- Reduced total cost of SAP ownership

- Improved system performance

- Stay on par with your sustainability targets

These benefits do not just apply to the Production environment, but also to non-Production systems that need to be regularly refreshed from Production, for Project and Support purposes.

We often hear people say that archiving is just a task on the periphery; there are more important projects. But have you wondered what magic archiving can do to ensure that those projects sail smoothly? As a matter of fact, there are several other reasons why organisations should undertake archiving processes. Let’s look at them –

Leverage a shiny and streamlined HANA database

One of the core reasons for choosing to archive data in your SAP systems is to leverage a streamlined HANA database. SAP data archiving helps mitigate memory and performance issues created by vast amounts of transaction data. Therefore, this enables consistent system response times, making the entire database smoother, more responsive, and more efficient for users.

Control your database growth

Let’s face it – your database isn’t getting any smaller with the amount of data generated daily. The more the data grows, the more storage you will require. In fact, to get this expanding storage, you must spend quite a lot of money. So, if you don’t want to spend excessive money on storage while wanting to control your database growth, rest assured – SAP data archiving is the best practice.

Keep your compliance in check

Another major reason to archive data in SAP systems is to keep your compliance in check. With archiving, you can meet legal requirements without hindering your business operations. By archiving data, you keep it available without deleting any important audit-relevant information, which helps you meet legal and compliance requirements and avoid penalties.

Read: https://www.tjc-group.com/resource/carlsberg-sap-data-archiving-and-gdpr-compliance-case-study/

2.2 Benefits of data archiving in SAP systems

Now that you have learnt about the reasons for archiving data in SAP systems, let’s talk about the numerous and significant benefits of this process –

Cost reduction: Put a lid on data volume growth and reduce the cost associated with storing large volumes of unnecessary data.

Data privacy: Data archiving helps ensure compliance with data privacy laws and tax and audit requirements by retaining or deleting personal information in a tax archive format.

Make the right-size move to S/4HANA: Archive old data, such as old company codes, that do not need to be migrated into the new system but must be retained for compliance.

Reduce migration times: The time window for migrating to a new server or to the cloud is typically very short, and the more data you have, the longer the migration takes.

Improve SAP system performance: A lighter system will result in quicker backup times and a better use of IT resources.

Lower the carbon footprint: Less data means less energy is used to store it, contributing to the organisation’s sustainability goals.

3. Does SAP data archiving differ in SAP S/4HANA and SAP ECC/NetWeaver?

Before looking at the differences in the data archiving process between S/4HANA and ECC, let's briefly review each system:

3.1 Overview of SAP ECC

Built on the SAP NetWeaver platform, SAP ECC is a robust Enterprise Resource Planning (ERP) system that organisations deploy on-premises to suit their business needs. NetWeaver is an integration and application platform that forms the foundation for all the classic SAP software solutions. It offers a range of technologies and tools that enable these solutions to run on various hardware and software platforms, and this breadth makes NetWeaver easier to use and integrate.

Functionalities of SAP ECC in a quick glance

One of the foremost functionalities of SAP ECC/NetWeaver is a unified, real-time view of business data. It gives organisations in-depth insight, helping them make more informed decisions. The system comes with built-in analytics and reporting tools that drive continuous improvement and better business results. SAP ECC also offers a deep pool of features that allow organisations to manage several areas seamlessly, from financials and logistics to human resources, supply chain management, and more.

3.2 Overview of SAP S/4HANA

While SAP ECC/NetWeaver is a robust platform, technology keeps evolving, and SAP S/4HANA is a prime example of this progress. Originally released in 2014 as a next-generation financial solution under the name SAP Simple Finance, S/4HANA was expanded into a full-scale ERP system in 2015. The next-generation ERP directly embeds capabilities such as extended warehouse management (EWM) and production planning and detailed scheduling (PP/DS). With the 2018 upgrades, SAP added predictive accounting to S/4HANA, along with further embedded intelligent technologies. In 2021, SAP released multiple industry-specific offerings of S/4HANA for energy and natural resources, financial services, service and consumer industries, discrete industries, and public services. Since then, SAP has made a significant effort to include generative AI features.

Functionalities of S/4HANA in a quick glance

As it continues to evolve, SAP S/4HANA comes with features such as the Business Partner approach and Customer/Vendor Integration (CVI). The next-generation ERP is also integrated with SAP Fiori 3.0, with SAPUI5 as its enabler, giving a responsive and highly intuitive user experience. Natural language interaction and machine intelligence are also expected to become a key part of the Fiori experience in the future.

3.3 SAP data archiving: SAP S/4HANA vs SAP ECC

Now that we have discussed SAP ECC/NetWeaver and SAP S/4HANA in brief, let's address the bigger question: is the data archiving process different in these systems? It is. While the processes may look the same from the outside, a closer look tells a different story. Here's why:

The database of the ERP systems

The foremost difference in data archiving between S/4HANA and ECC lies in their databases. While SAP ECC runs on several databases, such as DB2, Oracle, SQL Server, and SAP MaxDB, S/4HANA runs on the SAP HANA in-memory database.

The deployment options of the systems

Next come the deployment options. SAP ECC is deployed on-premises, whereas S/4HANA offers multiple options: on-premises, in a hosted cloud or a public cloud, or in a hybrid model combining on-premises and cloud. SAP favours implementation through RISE with SAP.

Architecture of the systems

Another factor behind the difference in SAP data archiving between S/4HANA and ECC is system architecture. While SAP ECC's architecture is modular, it still follows a traditional approach. S/4HANA, on the other hand, has a more streamlined, next-generation architecture that supports powerful, seamless development and business operations, realised in particular through the use of SAP BTP.

4. Data archiving in SAP S/4HANA

4.1 Database tables, business and archiving objects for S/4HANA

In SAP S/4HANA, data archiving is based on the archiving objects of the Archive Development Kit (ADK). Each archiving object has at least a write program and a delete program, and groups together the database tables that belong to the same business object (such as accounting documents or sales orders). Tables that are used by several archiving objects, such as those for texts or change documents, are handled through archiving classes. Each archiving object also has a read program that lets you search and display the contents of archive files. In addition, some tables are marked for deletion because they contain redundant data that is no longer required.

4.2 Benefits of data archiving for the S/4HANA journey

Here are some of the most sought-after benefits of SAP data archiving for the S/4HANA migration journey –

The HANA database cost

A challenge many corporations face is the cost of HANA memory. Scalability comes at a price: organisations must absorb costs that rise with the size of the database. On top of this come ongoing infrastructure costs, even when these are paid through a RISE program. Together, these can put a heavy financial burden on organisations.

Now, as your organisation grows, the requirement for HANA memory will also grow with it, consequently raising the need for hardware like disks, processors, and so on. All in all, it may hinder the S/4HANA migration journey, which is why opting for data archiving is a clever strategy. In fact, as mentioned before, data archiving is the way to go if organisations want to control their database growth and enjoy a more streamlined DB.

A rightsized database helps reduce costs and makes future evolutions, version updates, and migrations faster. It is far easier, and avoids unnecessary expenditure, to do this before the migration and then on an ongoing basis than to do it afterwards. Once you have moved to S/4HANA, it is important to keep your data growth under control to prevent spiralling costs. Databases that are not actively managed typically grow by 10 to 15% per year, and even more in the initial years.
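To see what 10 to 15% annual growth means in practice, the compound-growth arithmetic can be sketched in Python. The 1,000 GB starting size and the five-year horizon are hypothetical figures chosen for illustration.

```python
def project_db_size(current_gb: float, annual_growth: float, years: int) -> float:
    """Compound growth: size after n years = current size * (1 + growth rate) ** n."""
    return current_gb * (1 + annual_growth) ** years


for rate in (0.10, 0.15):
    print(f"{rate:.0%}: {project_db_size(1000, rate, 5):,.0f} GB after 5 years")
# 10%: 1,611 GB after 5 years
# 15%: 2,011 GB after 5 years
```

At 15% a year, an unmanaged database roughly doubles in five years.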

The storage costs

Of course, where there are database costs, there are storage costs too. The catch here is to use a suitable server for your archived data: a good archive server achieves a high data compression ratio, which in turn reduces storage costs. This is essential when corporations are migrating from on-premises systems to S/4HANA Cloud.

Seamless data migration

Last but not least, the more data there is, the more complexity organisations face, not to mention the adverse impact on system performance (even with S/4HANA, which often comes as a surprise when a corporation faces ACDOCA performance issues for the first time). Hence, during the S/4HANA migration journey, it is good practice to move to the new system with relevant, manageable data, and data archiving, as well as SAP lean SDT, helps you achieve just that. Data archiving splits the data leaving the live system into two groups: relevant data, which is archived, and data that is deleted. This ensures that only important data remains readily accessible and is migrated to the new system, where it is easier to manage. Moving older data from the SAP archive format to a third-party intermediate archive application can also help. Overall, archiving and/or SDT not only prepare your data but also help deliver an effortless migration.

5. Data Archiving in preparation for S/4HANA migration

Getting your data in shape prior to the move is essential. Having the right-sized database will help reduce costs and make the migration process faster. It is far easier to do this before the migration than afterwards to prevent unnecessary expenditure.

5.1 Technical considerations before migrating to S/4HANA

Properly prepping your data before making the move to S/4HANA is paramount for a successful migration. These are essential considerations to be made:

- Cloud and hardware planning and data volume management go hand in hand. Plan your cloud or hardware needs (particularly memory and disk requirements) based on your data volume needs.

- Check annual data growth rates. It is best practice to examine the size of your SAP database and measure how much it has increased every year. SAP provides a standard DVM report to review data growth for various selected objects (e.g. tables) in SAP systems.

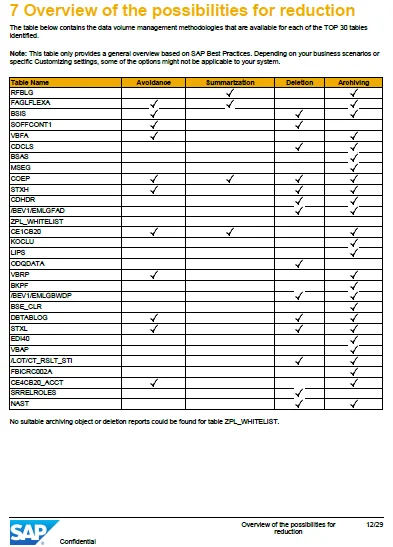

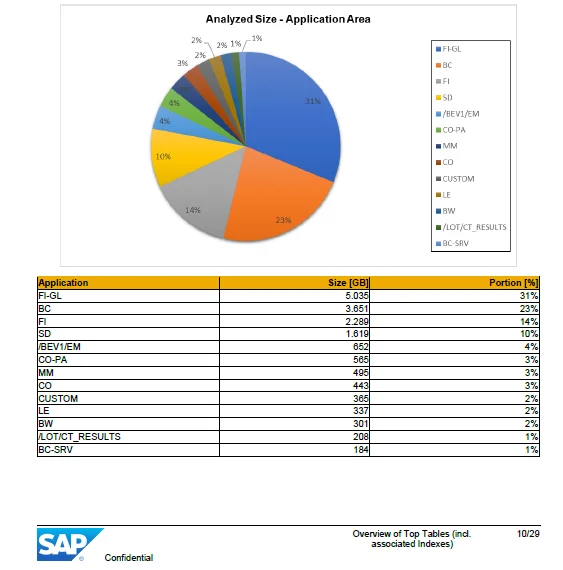

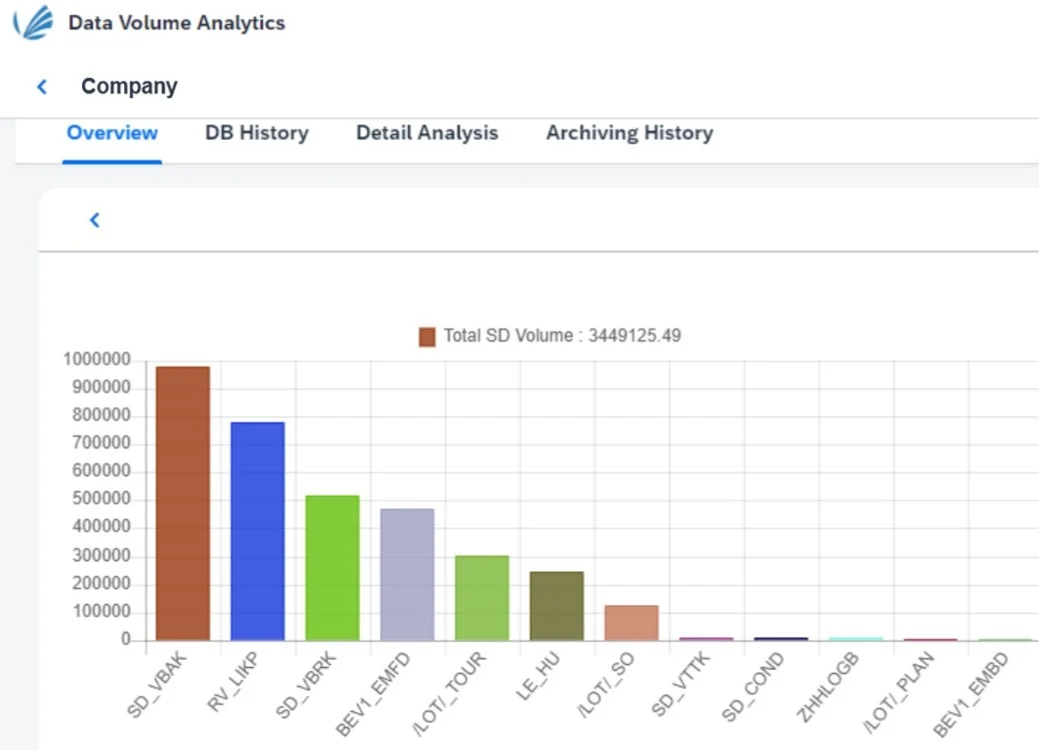

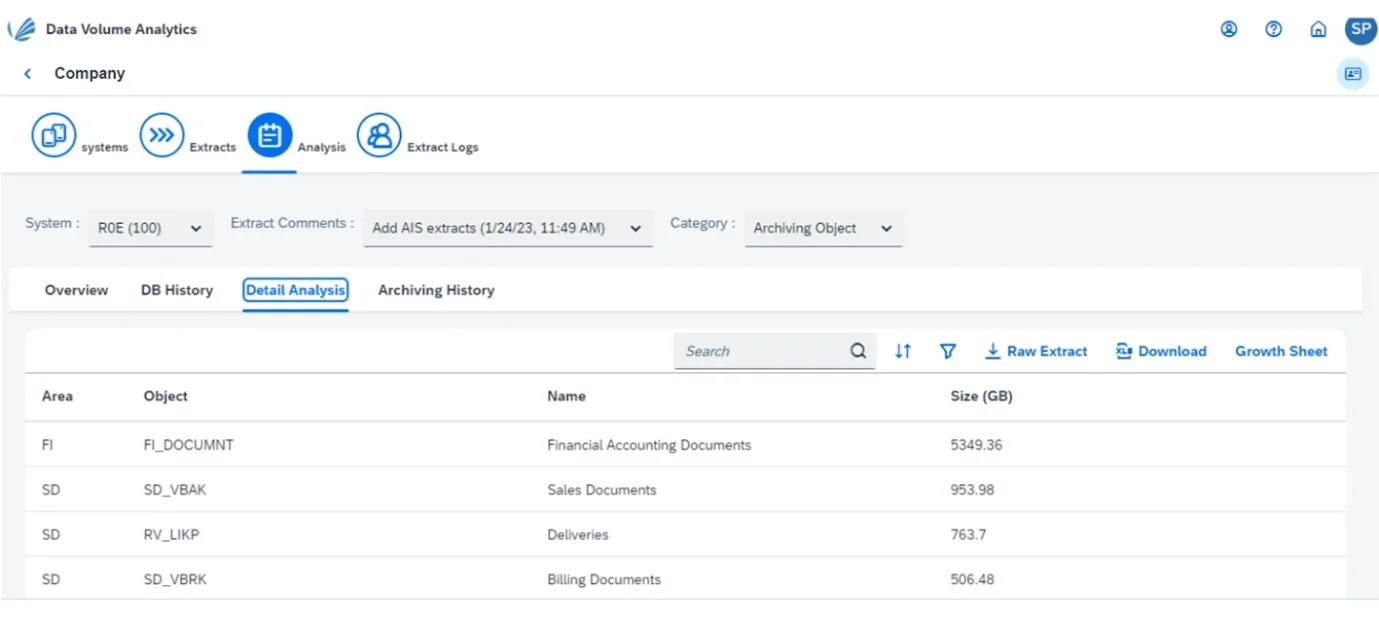

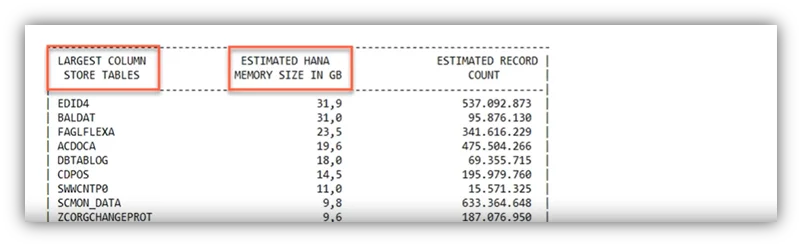

- In the sample SAP DVM report, the table lists tables with the various DVM options: avoidance, summarisation, deletion, and archiving. Avoidance and summarisation are rarely implemented because of their business impact, so deletion and archiving are the most commonly used DVM methods.

In the pie chart, you can identify the application(s) that generate the largest data volume in your system; however, a detailed analysis of which archiving objects to implement, with projected savings, is missing.

- Although the DVM report can be complicated to implement and does not cover all the tables in an SAP system, it is a good starting point. TJC Group has developed a special DVM analyser that overcomes the limitations and gaps in SAP's tool. It provides accurate figures on data volume growth for tables as well as archiving objects.

- Expect data growth to be affected by business growth. Suppose a company opens a new branch in a new country: new company codes will be created, which drives data growth. Mergers and acquisitions typically have an impact on data growth too.

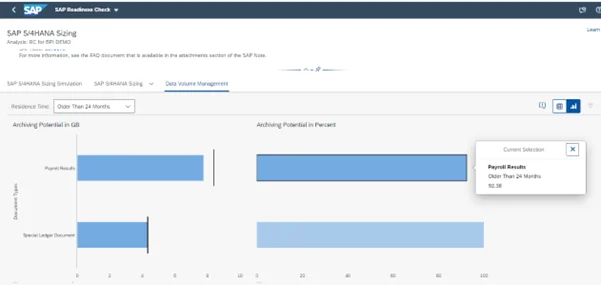

- Run the SAP Readiness Check. New system functionality increases data growth: system integrations, new software functionality, and custom development in your SAP system will all increase data volumes; this is unavoidable. We recommend running the SAP Readiness Check, a scan that produces reports on many items. Always check the Simplification Items before migrating to S/4HANA and focus on data volume reduction or data cleansing as a starting point.

Ref: SAP

- Data volume reduction and/or Landscape Transformation. The next step is to actually reduce data volumes. But how? Technical Data Archiving and Data Deletion are the two most efficient ways to achieve maximum volume reduction in existing SAP systems. Even if your organisation hasn’t made a firm decision to migrate to S/4HANA, data volume management will still produce significant cost and efficiency benefits, freeing up IT resources to focus on greater value-adding initiatives.
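The annual growth check recommended above amounts to simple year-over-year arithmetic. A minimal sketch, assuming you have already collected one database size measurement per year (the figures below are hypothetical):

```python
def annual_growth_rates(sizes_by_year: dict) -> dict:
    """Year-over-year growth, e.g. {2022: 0.125} means +12.5% versus 2021."""
    years = sorted(sizes_by_year)
    return {
        curr: sizes_by_year[curr] / sizes_by_year[prev] - 1
        for prev, curr in zip(years, years[1:])
    }


# Hypothetical yearly database sizes in GB
sizes = {2021: 800, 2022: 900, 2023: 1035}
for year, growth in annual_growth_rates(sizes).items():
    print(f"{year}: {growth:+.1%}")
# 2022: +12.5%
# 2023: +15.0%
```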

5.2 Data Volume Management recommendations for SAP S/4HANA migration

Migrate only the required data

Don't carry forward the baggage you have accumulated for years. An S/4HANA migration is also a transformation opportunity: simplify the data model with a leaner architecture and a new user experience using SAP Fiori UX. Migrating only the relevant data will achieve the following:

- Reduce hardware and license costs

- Lower migration effort and costs

- Shorter conversion runtimes

- Reduce risks associated with bad data during the migration process

- Right-size your initial investment in your HANA appliance

- Control and Manage OPEX by managing the growth of data

Keep in mind the cost associated with the size of your database, not only for moving the data but also for ongoing infrastructure after the migration to S/4HANA. Migrate only the right data to avoid unnecessary costs, and keep archiving once S/4HANA is in use. Controlling the volume of data in HANA memory keeps operational expenditure in check and avoids additional hardware or licensing costs under SAP's pricing model.

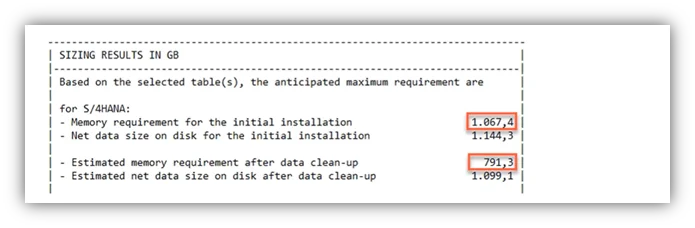

Estimate HANA memory needs

Start by running the SAP sizing report, which provides a first estimate of the expected in-memory and disk requirements. The report also highlights the largest tables.

Note that the sizing report considers data aging as one option for data reduction. Data aging moves large amounts of data stored within the SAP HANA database out of HANA's main memory. It differentiates between operationally relevant data (hot/current) and data that is no longer accessed during normal operation (cold/historical).
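The hot/cold split, and why the sizing report's 14-day residence time flatters the result, can be illustrated with a simple partition by residence time. This is a conceptual sketch only: real data aging works on table partitions defined by SAP's aging objects, and the `closed_on` field used here is a hypothetical stand-in for the aging criterion.

```python
from datetime import date, timedelta


def split_hot_cold(records: list, today: date, residence_days: int) -> tuple:
    """Partition records into hot and cold by residence time.

    Open records (closed_on is None) always stay hot; closed records become
    cold once they have been closed for longer than the residence time.
    """
    cutoff = today - timedelta(days=residence_days)
    hot, cold = [], []
    for record in records:
        closed_on = record["closed_on"]
        if closed_on is not None and closed_on < cutoff:
            cold.append(record)
        else:
            hot.append(record)
    return hot, cold


# Hypothetical documents: two closed, one still open.
docs = [
    {"id": "A", "closed_on": date(2024, 1, 1)},
    {"id": "B", "closed_on": date(2024, 6, 20)},
    {"id": "C", "closed_on": None},
]
for days in (14, 365):
    hot, cold = split_hot_cold(docs, date(2024, 6, 30), days)
    print(days, [d["id"] for d in hot], [d["id"] for d in cold])
```

With a 14-day residence time, document A already counts as cold; with a one-year residence time, nothing does, so the estimated memory savings shrink accordingly.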

However, data aging must be looked at with care for several reasons:

- The sizing report's calculations assume a residence time of 14 days. This is not a realistic scenario in most cases.

- Data Aging is irreversible and no longer recommended by SAP (note 2869647). Caution is advised as there is no way back.

- Data aging is a temporary fix because data will be loaded back into memory.

The sizing reports point to Data Archiving and Native Storage Extension as suitable alternatives to Data Aging in SAP S/4HANA.

Once you're on SAP S/4HANA, bear in mind that HANA memory is expensive and its scalability is limited. The HANA pricing model follows the T-shirt structure shown in the table above.

Based on the estimated memory needs, you choose a size ranging from XXS to XXL. Moving up to the next tier can mean a large jump in memory size, with most of the additional memory sitting idle and generating unnecessary costs. It is therefore advisable, first and foremost, to reduce memory needs as much as possible and, secondly, to estimate those needs accurately.
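The tier jump can be illustrated by picking the smallest tier that fits a memory requirement and reporting how much of it would sit idle. The tier names follow the XXS to XXL range mentioned above, but the sizes in GB are hypothetical placeholders, not SAP's actual price list.

```python
# Hypothetical tier sizes in GB; real tiers and sizes depend on your SAP contract.
TIERS_GB = {"XXS": 128, "XS": 256, "S": 512, "M": 1024, "L": 2048, "XL": 4096, "XXL": 8192}


def pick_tier(required_gb: int) -> tuple:
    """Return (tier name, idle GB) for the smallest tier that fits the requirement."""
    for name, size_gb in sorted(TIERS_GB.items(), key=lambda item: item[1]):
        if required_gb <= size_gb:
            return name, size_gb - required_gb
    raise ValueError("requirement exceeds the largest tier")


print(pick_tier(1000))  # ('M', 24)
print(pick_tier(1100))  # ('L', 948)
```

Under these assumed sizes, a 10% increase in required memory, from 1,000 to 1,100 GB, doubles the tier and leaves almost 950 GB idle, which is why reducing memory needs comes first.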

To learn more about HANA memory, read this blog article: HANA Database: How to keep data growth under control | SAP Data Management

Run a data archiving program

Archive as much data as possible before the S/4HANA migration and, if possible, automate the process for additional gains. This initiative will make a major contribution to reducing the cost of running your SAP S/4HANA estate and, with data volumes finally under control, will also postpone the likely timescale for provisioning additional HANA memory.

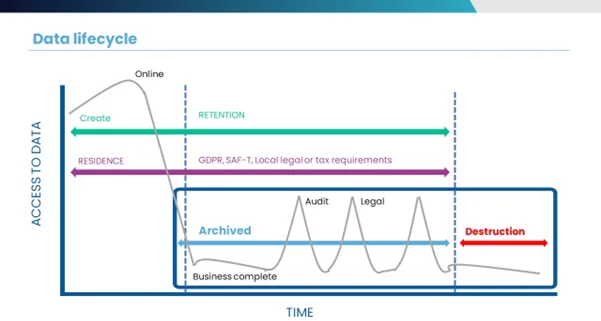

Without ongoing action, any ERP system will keep growing unchecked, resulting in slow performance and a need for additional storage. S/4HANA is a premium storage environment: it is very powerful and delivers exceptional performance, but at a high cost. If data volume growth is left unchecked, significant and unnecessary costs will be driven into the business. As seen before, data archiving in SAP systems consists of extracting rarely accessed data from the database, writing it to archive files, and deleting the data from the database. The archive files are then moved to more cost-efficient, long-term storage via ArchiveLink, CMIS, or the ILM interface. Archived data remains available in read-only mode and reduces memory needs. Data archiving also covers the full data lifecycle, as the information can be deleted once it is no longer needed.

“Everybody talks about the challenge of data management during the journey to S/4, and they are right. Above all ensure that you’ve got the right governance and done the relevant data cleansing. And if you think you’ve done enough, you probably haven’t. Spend as much time on the data management and start early, investing in automation wherever possible”.

Mark Holmes, Principal Enterprise Architect at British Telecom

Choose the migration approach

Whether you are starting from scratch (a greenfield migration), opting for a brownfield approach, or planning to use the SAP lean Selective Data Transition approach, we can support all scenarios. It is essential to understand the impact of your database size, how you will use your data in future, and your overall system landscape.

Set up a post-migration strategy

Once you have moved to S/4HANA, it is important to keep your data growth under control to prevent spiralling costs. Databases that are not actively managed typically grow by 10 to 15% per year. Ongoing automated data archiving will keep database growth under control. HANA memory comes at a high cost and its scalability is limited, so managing database growth is crucial. You'll want to adopt strategies that slow memory growth to avoid jumping to the next T-shirt size. This blog article gives some recommendations for keeping HANA memory growth under control.

Decommission the SAP legacy system

TJC Group offers a solution to decommission your old legacy systems, reducing the business risks related to non-compliance and minimising S/4HANA migration costs. Legal regulations and GDPR data privacy requirements are frequently not enforced on old systems, and the IT costs and administration of maintaining these systems drain resources. You may therefore consider ELSA by TJC Group as one of the best solutions, built on the SAP BTP platform and designed with robust security protocols.

5.3 The approaches for S/4HANA migration process

Initially, there were only two migration options, at opposite ends of the spectrum: a brand-new implementation (greenfield) or a system conversion (brownfield). Later, the hybrid Selective Data Transition approach emerged as a suitable option. Each scenario has its pros and cons. Let's analyse the main migration scenarios:

The Brownfield Approach

The Brownfield Approach, also called System Conversion, is where the company decides to migrate its existing business processes into SAP S/4HANA. It is similar to a technical upgrade. Users choose this migration option if they wish to keep all their existing data and reuse existing business processes. As explained earlier, this option can prove very expensive because of the hosting costs of carrying all that data into SAP HANA.

The Greenfield Approach

The Greenfield Approach, also called New Implementation, is where the company decides to start from scratch and implement a brand new, consolidated SAP S/4HANA system. It is a blank-canvas approach that provides scope to completely redefine business processes. Historic data cannot be migrated into a New Implementation, and the migration process is managed through the SAP S/4HANA Migration Cockpit.

The Bluefield approach

The Bluefield approach, also called SAP lean Selective Data Transition, is used when customers need more flexibility: to redefine certain processes while keeping others, and to retain some data while archiving what is not required. This route is chosen by companies that wish to migrate a selection of data across multiple organisational units. It is a good option for companies that have more than one ERP system and want to consolidate them into a single SAP S/4HANA system and load historic data (closed business data) or data from mergers and acquisitions.

Note: there are additional, smaller scenarios that are not covered in this guide, such as migration projects linked with architecture simplification (many-to-one), migrations including Landscape Transformation (such as company code deletion, company mergers and many other business cases), or data privacy implementation.

5.3 Advantages of each scenario

What are the advantages of an SAP Greenfield implementation?

A greenfield implementation enables a fresh start meaning that you won’t be limited by the constraints imposed by your existing SAP system. Wipe the slate clean and design the systems to best serve your needs. It is a catalyst for immediate innovation and transformation.

What are the advantages of an SAP Brownfield implementation?

The brownfield approach makes practical sense for companies looking to preserve their existing business processes, in a similar way to how they were in SAP ECC or previous versions. The migration process is more automated than with a Greenfield implementation. This approach is usually faster and comes at a lower cost initially. Process transformation, if any, occurs within separate initiatives after go-live.

What are the advantages of a Lean SDT?

With the selective data transition approach, a company can enjoy the best of both worlds: establishing new business processes based on a new configuration set, all while extracting, transforming, and loading historical data subsets to keep important data. Organizations can, afterwards, alter or enrich the data to conform to the new configuration, business processes and reporting needs.

5.4 Tools for each migration scenario

Generic tools

The SAP Readiness Check for SAP S/4HANA performs functional and technical assessments for SAP ERP systems prior to a planned conversion to S/4HANA. The tool’s main focus is on the functional assessment that evaluates all available SAP S/4HANA simplification items, identifies the ones that are relevant for your system, and ranks the amount of effort you might expect for implementation.

The SAP Readiness Check can be a good starting point for understanding the extent of data volume growth before system migration, but it does not provide detailed information about which archiving objects to choose or their best-practice retention times.

TJC Group has also developed an internal tool to manage the database growth and facilitate data management in the migration to SAP S/4HANA. The Database Volume Analyser identifies Archive Objects with total volumes, it separates the deletion and housekeeping tables and displays the information in growth charts.

Tools for the Brownfield approach

The main SAP tools for the brownfield approach are the following:

Maintenance Planner, in conjunction with Support Package Manager/Support Add-on Installation Tool: The cloud-based Maintenance Planner is available through SAP Solution Manager. It provides a centralized tool for setting up updates, upgrades and new installations within the SAP system environment.

Software Update Manager (SUM):

The SUM tool completes the following tasks as part of a one-step conversion:

- Optional database migration: if the source system is not yet running on the SAP HANA database, use the SUM’s Database Migration Option to migrate the database to SAP HANA during the conversion

- Installation of the S/4HANA software

- Conversion of your data into the new data structures used by S/4HANA (this is the automated part of the data migration). Related tools include 1) the Customer/Vendor Integration Cockpit and 2) the Obsolete Data Handling tool, which deletes obsolete data that remains after the conversion of your SAP ERP system to S/4HANA.

Tools for the Selective Data Transition approach

Multiple tools are necessary for executing a selective data transition, and both standard SAP tools and third-party tools are available. The SAP Business Transformation Centre is SAP’s standard offering for managing lean SDT migrations and is included in the SAP standard support licence. Launched by SAP in September 2023, the SAP Business Transformation Centre (SAP BTC) is a migration tool that facilitates S/4HANA migration in the Lean Selective Data Transition (SDT) scenario. It offers a guided, end-to-end process for both data migration and transformation.

The SAP Business Transformation Centre strongly supports the creation of a Digital Blueprint to kickstart SAP S/4HANA migration projects in Lean SDT migrations.

SAP BTC is powered by SAP Cloud Application Lifecycle Management (ALM), a SaaS offering based on components of SAP BTP. One of the main features of SAP BTC is its strong support for developing the digital blueprint for the migration, with data-driven insights and guided project scoping, including blueprint documentation. SAP BTC provides a digital blueprint for:

- Data insights

- Visualisation

- Recommendations

Tools for the Greenfield approach

The main SAP tool for the greenfield approach is the SAP S/4HANA Migration Cockpit. This tool is SAP’s recommended pathway for the migration of business data to SAP S/4HANA and SAP S/4HANA Cloud. The migration cockpit is part of SAP S/4HANA and SAP S/4HANA Cloud and is included in these licences.

Some advantages of the migration cockpit are:

- Migrates data from SAP systems and non-SAP systems to SAP S/4HANA and SAP S/4HANA Cloud

- Provides a comprehensive migration solution

- Includes mapped data structures between source and target systems

- Reduces test effort

- Migration programs are generated automatically, so no programming is required by the customer

- Standard APIs are used to post the data to SAP S/4HANA system

5.5 How does data archiving fit into each migration approach?

Without having a data volume management programme in place to streamline the implementation phase, migrating to SAP S/4HANA® can become prohibitively expensive. By introducing an effective data volume management strategy, organisations can reduce the scope, complexity, duration, and long-term cost of ownership of their SAP S/4HANA® migration. This is achieved by providing savings on future hardware requirements, HANA memory needs and SAP S/4HANA® licensing costs.

After migration to SAP S/4HANA® is complete, further savings can be achieved by decommissioning the SAP and non-SAP applications left behind post-migration. This will further simplify the overall IT landscape and reduce long-term administration and maintenance costs.

Let’s look in detail at the options recommended for each migration approach to optimise the data management strategy before and after the migration.

Data Archiving for Brownfield approach

In System Conversion (Brownfield) projects, any risks associated with the migration process can be mitigated by reassessing the quality of the master data (especially customer and vendor data) and by archiving dispensable transactional data prior to commencing the project. This helps by reducing the migration ‘go live’ window.

There are multiple benefits to be gained from data archiving in Brownfield projects, the most important of these are as follows:

- Archiving reduces the database size, which reduces the volume of HANA memory needed. Less memory means significant cost savings.

- Archiving shortens the migration timeline because less data is migrated to S/4HANA. Reducing the time needed to complete this phase reduces the disruption to business operations and the amount of resources required for the project.

Decommissioning legacy systems in a Brownfield approach can also provide further benefits. Some former tables and some master data no longer exist in SAP S/4HANA, even in a brownfield approach. Also bear in mind that some of the data will go through a transformation, yet SAP does not provide a change history. If an auditor one day asked for the traceability of taxes back to the origin of the system, the company would not be able to provide it unless the SAP legacy system has been fully decommissioned and access to legacy data is enabled.

Data Archiving for a Lean SDT (Bluefield)

Selective Data Transition (Bluefield) migration projects are the most innovative, with SAP lean Selective Data Transition based on the SAP Cloud ALM solution. As mentioned in section 5.4 (“Tools for the Selective Data Transition approach”), the SAP Business Transformation Centre is SAP’s standard offering (included in the SAP standard licence) for managing a Lean SDT migration.

Data archiving offers advantages for SAP customers in both scenarios:

- Archiving and deleting data before the migration phase can help with large systems.

- Automated archiving may allow smaller systems to be used.

- The TJC ELSA platform offers multiple scenarios for SAP ECC archive access, SAP S/4HANA archive access, or both, including mixed ECC and S/4HANA views.

- Decommissioning SAP legacy systems post-migration ensures that legacy data can be accessed at all times.

Data Archiving for Greenfield approach

New Implementation (Greenfield) migrations offer limited scope for data archiving, as data is migrated selectively to S/4HANA. Only open data can be migrated using the SAP Migration Cockpit.

In this scenario legacy SAP systems are connected to the SAP Migration Cockpit. This handles the process of pulling across data into S/4HANA. As the SAP migration cockpit does not allow archived or closed data to be migrated into HANA, data archiving is mostly helpful in closing open items (and an automated process may be a blessing here).

Post migration, there is scope for legacy system decommissioning as the historic data must be retained for legal, tax or audit purposes. With the support of ELSA (Enterprise Legacy System Application), it is possible to decommission old SAP (and also non-SAP) systems and enable access to legacy data at all times from SAP S/4HANA or through ELSA app.

5.6 Implementation Customer/Vendor Integration (CVI)

The Customer/Vendor Integration (CVI) is the first step before master data can be migrated to SAP S/4HANA, but customers and vendors that are no longer relevant do not need to be migrated. It is therefore best practice to archive such data before the CVI is performed, which also makes the CVI process smoother. The CVI must be used in order to work with the SAP Business Partner object in S/4HANA.

In SAP ECC, customers, vendors and business partners are maintained as separate master data objects, each with its own tables; in S/4HANA®, the Business Partner is the single point of entry for maintaining them. The CVI is a mandatory step before migrating to S/4HANA because it converts customers and vendors into Business Partners. The CVI component ensures synchronisation between the Business Partner object and the customer/vendor objects.

Archiving customers and vendors is not a simple task. It requires all related transactional data, such as sales orders, purchase orders and many other financial documents, to be properly closed, completed and then archived. Forcing archiving without a way to access potentially useful information is tricky, and we can help with it.
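The dependency described above can be sketched as a simple readiness check: a customer or vendor only becomes a candidate for archiving once every related document is closed and archived. This is a minimal Python illustration; the `Document` structure, the status values and the function name are hypothetical and do not correspond to SAP tables or to any TJC Group tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    partner_id: str  # hypothetical: customer or vendor number the document refers to
    status: str      # hypothetical lifecycle status: "open", "closed" or "archived"


def archiving_readiness(partner_ids, documents) -> dict[str, list[str]]:
    """Group partners by what still blocks archiving their master data.

    - "ready": every related document is archived (or there are none)
    - "archive_documents_first": documents are closed but not yet archived
    - "close_documents_first": at least one related document is still open
    """
    has_open = {d.partner_id for d in documents if d.status == "open"}
    not_archived = {d.partner_id for d in documents if d.status != "archived"}
    result = {"ready": [], "archive_documents_first": [], "close_documents_first": []}
    for partner in sorted(partner_ids):
        if partner in has_open:
            result["close_documents_first"].append(partner)
        elif partner in not_archived:
            result["archive_documents_first"].append(partner)
        else:
            result["ready"].append(partner)
    return result
```

Checking open items first reflects the order in the text: documents must be closed before they can be archived, and only then can the master record itself be archived.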

TJC Group can help you improve the reliability of Customer/Vendor Integration prior to S/4HANA migration in three steps, carried out in the source system (SAP ECC) before the CVI:

- Archive or delete unused customers and vendors to improve the reliability of the CVI.

- Also archive the transactional data of those customers and vendors, keeping this information accessible for future use with ELSA by TJC Group.

- Keep access to cut-off information before and after the migration to answer potential auditors’ requests, from both a financial and a business point of view.

To archive unused customers and vendors, their transactional data must first be archived and/or deleted. Archiving or deleting customer and vendor data that is no longer in use is a major factor in regulatory compliance (e.g. GDPR in Europe or Loi 25 in Quebec).

This is managed with the SAP ILM standard tool. On top of that, the whole data archiving and data deletion process can be automated with the Archiving Sessions Cockpit (ASC) by TJC Group, streamlining the entire process. The ASC is the only SAP-Certified solution in existence that is truly capable of automating the data archiving and data deletion phase prior to system migration.

5.7 Migrating data archiving processes from SAP Legacy systems to SAP S/4HANA

Access to data in SAP S/4HANA is completely different from the way it was in SAP ECC. Fiori apps in S/4HANA access data using OData services; however, they do not retrieve archived data. During the migration, we recommend addressing the following two questions:

QUESTION 1: What should I do with existing legacy system archiving: stop it or keep it alive?

If you do not decommission the legacy system and you have SAP ILM in place for data privacy reasons, keeping the existing process alive is a must. Still, data archiving is a manual process that never sleeps, which is why automation with the Archiving Sessions Cockpit brings a huge advantage.

QUESTION 2: What should you do with S/4HANA archiving?

Planning an early housekeeping and technical archiving project will most likely be beneficial. Keep monitoring memory volumes and the performance of key tables (such as ACDOCA), and anticipate when a more complete archiving project should take place.

6. Strategies to optimise data volumes in SAP S/4HANA

6.1 Data Archiving in SAP S/4HANA

The reasons for performing data archiving in S/4HANA follow the same logic as data archiving in SAP ECC. Classic data archiving plays an important role in SAP S/4HANA in limiting the size and growth of the HANA database. Running out of HANA memory can lead to complex upgrades and unexpected costs. Organisations embarking on an SAP S/4 journey need a robust data archiving strategy to optimise their data effectively and ensure compliance with data privacy laws.

There are several differences between data archiving in SAP ECC and in SAP S/4HANA:

- Fiori apps that access S/4HANA data using OData services do not retrieve archived data. SAP Fiori is a user interface for SAP applications designed to be easy to use and responsive on any device. It provides several standard archiving functions, such as the ability to analyse archiving variant distribution, to monitor archiving jobs and to destroy ILM data.

- There are several archiving transactions that are not available in SAP Fiori. These transactions are used to perform various archiving tasks which can still be accessed via the old SAP GUI framework. However, from a practical point of view, only those transactions that have been “fiorised” will display archive data access.

- See below an example of a Fiorised application (based on the old GUI display) for an archived invoice:

- Some heavily memory-consuming tables in SAP S/4HANA can be reduced through housekeeping (including some important log tables), data archiving and selective archiving. This applies to tables such as SOFFCONT12, SOC3N, CDPOS, DBTABLOG, BALDTA and EDID4.

- The SAP HANA database supports two types of tables: row tables and column tables. Each type is held in its own store (row store or column store) and is managed differently. Row tables are loaded into memory permanently, so you want to keep their size as small as possible. Data archiving is an effective way to decrease the data volume on the system dramatically without creating issues when accessing the data.

With data archiving, data is removed from the database, so it is no longer loaded into memory, and is stored in archive files to which the system has read-only access. The archive files are kept in a much cheaper storage environment. In a nutshell, data archiving provides a cost-effective way of managing data in S/4HANA.

6.2 SAP HANA Native Storage Extension (NSE)

SAP HANA Native Storage Extension (NSE) is a native SAP solution for managing warm data in SAP HANA. It makes it possible to manage less frequently accessed data without fully loading it into memory. NSE adds a seamlessly integrated, disk-based processing extension to SAP HANA’s in-memory column store, supporting a large range of data sizes at a low TCO.

NSE is based on the temperature of the data: data is classified by how frequently it is accessed at query level, for each column. The NSE Advisor categorises a table’s data into three temperature tiers (hot, warm and cold), each of which is loaded into or unloaded from memory in a different way, using a dedicated buffer cache (SAP Note 2799997). After analysis, the database administrator can decide to enable NSE for a table, an individual column or a table partition. A positive point about NSE is that it can easily be undone if needed.
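As a rough illustration of temperature-based classification, the toy Python function below buckets objects into hot, warm and cold tiers by access count. It is not the NSE Advisor's algorithm: the thresholds, the object names and the function name are hypothetical, and a real analysis would rely on the NSE Advisor's own recommendations.

```python
def classify_temperature(access_counts: dict[str, int], hot_min: int, cold_max: int) -> dict[str, str]:
    """Toy tiering: bucket objects (columns, partitions, tables) by access count.

    hot_min and cold_max are hypothetical thresholds chosen by the analyst.
    """
    if cold_max >= hot_min:
        raise ValueError("cold_max must be below hot_min")
    tiers = {}
    for name, count in access_counts.items():
        if count >= hot_min:
            tiers[name] = "hot"
        elif count <= cold_max:
            tiers[name] = "cold"
        else:
            tiers[name] = "warm"
    return tiers
```

In NSE terms, hot objects would typically remain column loadable (fully in memory), while warm objects are candidates for being made page loadable, so that their pages are read through the buffer cache on demand.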

With NSE, it’s possible to expand the data capacity of an SAP HANA database without needing to upgrade the existing hardware. This means that with the same amount of memory, you can store more data, and with the same amount of data, you can use less memory.

The capacity of a standard HANA database is equal to the amount of hot data in memory. However, the capacity of a HANA database with NSE is the amount of hot data in memory plus the amount of warm data on disk.

The recommended ratio of main memory (hot data) to NSE (warm data) is 1:4. This means that if the total HANA in-memory size is 2 TB, up to 8 TB of warm data can be swapped in and out of the buffer cache. Furthermore, although the appropriate size of the buffer cache relative to the warm data varies from application to application, as a starting point the ratio of buffer cache to warm data on disk should not be smaller than 1:8.

For example (see below), if you have 8 GB of warm storage, you should allocate at least 1 GB of buffer cache. If your frequently accessed NSE data set is small, you can experiment with a larger ratio of warm storage to buffer cache.
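The sizing rules of thumb in this section (1:4 for hot to warm data, at least 1:8 for buffer cache to warm data, and capacity equal to hot plus warm data) reduce to simple arithmetic. The Python sketch below encodes them; the function names are hypothetical, and the ratios are only the starting points quoted here, not hard limits. Any unit works as long as it is used consistently.

```python
HOT_TO_WARM = 4        # warm data up to 4x the in-memory (hot) size: the 1:4 ratio
CACHE_TO_WARM = 1 / 8  # buffer cache at least 1/8 of the warm data on disk: the 1:8 ratio


def max_warm_data(hot_memory: float) -> float:
    """Upper bound on warm (NSE) data for a given in-memory size."""
    return hot_memory * HOT_TO_WARM


def min_buffer_cache(warm_data: float) -> float:
    """Lower bound on the NSE buffer cache for a given amount of warm data."""
    return warm_data * CACHE_TO_WARM


def total_capacity(hot_memory: float, warm_data: float) -> float:
    """Capacity with NSE: hot data in memory plus warm data on disk."""
    return hot_memory + warm_data


def check_plan(hot_memory: float, warm_data: float, buffer_cache: float) -> list[str]:
    """List the guidelines a proposed configuration does not meet."""
    issues = []
    if warm_data > max_warm_data(hot_memory):
        issues.append("warm data exceeds the 1:4 guideline")
    if buffer_cache < min_buffer_cache(warm_data):
        issues.append("buffer cache is below the 1:8 guideline")
    return issues
```

With 2 TB of memory, this gives up to 8 TB of warm data, a total capacity of 10 TB and a buffer cache of at least 1 TB; the 8 GB / 1 GB example above follows the same 1:8 rule.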

Takeaways about SAP HANA NSE

- NSE is designed to reduce memory usage for specific columns, partitions, and tables by not requiring those columns to be fully memory-resident.

- NSE is reversible: any changes can easily be undone.

- The effects of NSE on performance need to be tested carefully and validated before production use, as some decrease in performance is expected.

- Keep in mind that NSE is not a replacement for data archiving or data destruction because:

  - it does not help to meet data privacy requirements

  - it does not reduce the HANA disk size

- SAP HANA NSE offers native support for any SAP HANA application. However, current applications do not use its capabilities automatically, so it is up to managers and developers to adjust the database configuration in order to take advantage of NSE, for example by specifying a load unit when creating or altering database tables.

- NSE is the recommended SAP HANA feature to be used for data tiering of fast growing tables in Smart AFI (Smart Accounting for Financial Instruments) and FPSL (Financial Products Subledger – Banking Edition) / FPG (Financial Posting Gateway).

Getting started with NSE

The NSE Advisor will provide recommendations about load units for tables, partitions, or columns according to how frequently they are accessed. To get started with NSE, we highly recommend you go through the SAP HANA Cloud, SAP HANA Database Administration Guide.

6.3 Partitioning of column tables

The SAP HANA database’s partitioning feature divides column-store tables into separate sub-tables, or partitions. This makes it possible to break large tables down into smaller, more manageable pieces, which allows memory usage to be optimised. Table partitioning is only available for column tables; row tables cannot be partitioned.

About the Delta table

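Column tables are loaded into memory on demand. When a column table is updated, the change is first written to a separate, write-optimised area called the delta storage (often referred to as the delta table). During the delta merge, which moves these changes into the main storage, the old and the new main storage briefly coexist, so up to double the memory can be needed. That is why it sometimes makes sense to split tables into smaller pieces, so that less data is loaded into memory at once.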
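The memory effect of a delta merge can be shown with a toy model in Python: a column keeps a sorted main store and an append-only delta store, and merging builds a new main store while the old one still exists. Counting values rather than bytes, and ignoring HANA's compression, is a deliberate simplification; the class and function names are hypothetical, and the model assumes that each partition is merged separately.

```python
class ToyColumn:
    """Toy column: a sorted main store plus an append-only delta store."""

    def __init__(self, values):
        self.main = sorted(values)  # stands in for the read-optimised main storage
        self.delta = []             # changes are appended here first (write-optimised)

    def insert(self, value):
        self.delta.append(value)

    def merge(self) -> int:
        """Merge the delta into a new main store and return the peak number of values held."""
        new_main = sorted(self.main + self.delta)
        # Until the switch-over, the old main, the delta and the new main all exist.
        peak = len(self.main) + len(self.delta) + len(new_main)
        self.main, self.delta = new_main, []
        return peak


def peak_for_partitioned_merge(partitions) -> int:
    """If partitions are merged one at a time, the peak is the largest single merge."""
    return max(p.merge() for p in partitions)
```

With 1,000 values in the main store and 100 in the delta store, the toy merge peaks at 2,200 values held at once, roughly double the table. Splitting the same data into four partitions and merging them one at a time lowers the peak to 550.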

What is the best criterion for partitioning column tables?

The heart of the matter lies in the criterion, or “key”, defined to split the table. When users’ queries filter on the specified key, the system can identify exactly which partitions contain the queried data and avoid loading unnecessary partitions into memory. Conversely, if a query selects on a different field, such as “document type”, all partitions will be loaded into memory.

When a table is partitioned, the split is done in such a way that each partition contains a different set of rows of the table. There are several alternatives available for specifying how the rows are assigned to the partitions of a table, for example, hash partitioning, round-robin partitioning, or partitioning by range. You can find out more about table partitioning in SAP S/4HANA in SAP Learning portal – performing table partitioning.
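The following Python sketch illustrates the three assignment methods named above and the partition pruning described earlier: a query that filters on the partition key touches one partition, while a query on another field touches all of them. The `fiscal_year` key, the boundaries and the function names are hypothetical, and CRC-32 merely stands in for whatever hash function the database uses internally.

```python
import bisect
import zlib


def range_partition(key: int, boundaries: list[int]) -> int:
    """Range partitioning. Boundaries [2021, 2022, 2023] give partitions
    0: key < 2021, 1: 2021 <= key < 2022, 2: 2022 <= key < 2023, 3: key >= 2023."""
    return bisect.bisect_right(boundaries, key)


def hash_partition(key: str, partitions: int) -> int:
    """Hash partitioning: the same key always lands in the same partition."""
    return zlib.crc32(key.encode("utf-8")) % partitions


def round_robin_partition(row_number: int, partitions: int) -> int:
    """Round-robin partitioning: rows are dealt out in turn, regardless of content."""
    return row_number % partitions


def partitions_to_scan(query_filter: dict, partition_key: str, boundaries: list[int]) -> list[int]:
    """Partition pruning for a range-partitioned table."""
    if partition_key in query_filter:
        return [range_partition(query_filter[partition_key], boundaries)]
    return list(range(len(boundaries) + 1))  # no filter on the key: every partition is read
```

A query on `{"fiscal_year": 2022}` reads only partition 2, whereas a query on `{"document_type": "RV"}` has to read all four partitions.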

6.4 Regular housekeeping

Finally, regular maintenance operations are vital for row tables to prevent data inflation and keep database management efficient. Housekeeping is important for maintaining good system performance and keeping costs in check. Housekeeping activities include many routine tasks on the database administrator’s agenda, such as cleaning up old data and tables, removing duplicate or incorrect data records, and deleting temporary technical data (e.g. spool requests, jobs, application logs).
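Housekeeping of temporary technical data usually comes down to deleting entries older than a category-specific age. The Python sketch below shows that selection logic; the categories, the age limits in `MAX_AGE_DAYS` and the function name are hypothetical examples, not SAP defaults or recommendations.

```python
from datetime import date

# Hypothetical maximum ages in days per category of temporary technical data.
MAX_AGE_DAYS = {"spool": 14, "job_log": 30, "application_log": 90}


def select_for_housekeeping(entries, today: date) -> list[str]:
    """Return the IDs of entries older than the maximum age of their category.

    entries: iterable of (entry_id, category, created_on) tuples.
    Entries in categories without a configured age are left untouched.
    """
    due = []
    for entry_id, category, created_on in entries:
        max_age = MAX_AGE_DAYS.get(category)
        if max_age is None:
            continue
        if (today - created_on).days > max_age:
            due.append(entry_id)
    return due
```

Leaving unknown categories untouched is a conservative choice: nothing is deleted unless a rule has been defined for it.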

7. Legal compliance, data privacy and data archiving

7.1 An overview of legal and regulatory compliance

In simple terms, legal and regulatory compliance is a continuous process of adhering to the laws, regulations, and policies that govern the activities of an organisation. It takes a proactive approach to identifying, understanding, and implementing regulatory requirements, as any citizen or enterprise should, and to further mitigating legal risks. Beyond that, legal and regulatory compliance also builds trust with stakeholders and enables organisations to operate ethically within the wider business landscape.

As a matter of fact, failing to comply with the legal requirements may result in heavy penalties not just for the organisation but also for the individual at fault. Corporations cannot take legal compliance casually because breaching its rules can be costly. Additionally, if legal compliance requirements are not met, organisations will face internal or regulatory disciplinary actions, civil liability, damaged corporate reputation, and loss of goodwill.

7.2 An overview of data privacy

Data privacy is about managing sensitive personal data, known as “Personally Identifiable Information (PII)” and “Protected Health Information (PHI)”. This includes financial data, health records, social security numbers, and much more.

In a business context, data privacy goes beyond the PII and PHI of your employees, suppliers and customers. Several other types of information also come into play, such as company data, confidential agreements, business strategies, and so on. In fact, data privacy in business concerns any information that supports the smooth running of the organisation.

The importance of data protection and privacy cannot be stressed enough. As a matter of fact, data privacy is quintessentially important for legal and regulatory compliance. There are several data protection laws like the GDPR in the EU, DPDP in India, Law 25 (Loi 25 for French speaking persons) in Quebec, Canada, etc., in place to ensure privacy of the information collected by the corporations. Apart from this, data privacy is also important for –

- Protection of information

- Preserving individual autonomy

- Ethical data practices

- Ensuring trust and confidence amongst your customers, stakeholders, and employees

- Enabling data-driven innovation

7.3 How does SAP data archiving play a role?

Personal data is all around us, whether you check records in HR systems or in supply chain management. Look at the data scattered across several SAP modules: multiple documents and tables are involved, and there is data in the organisation’s CRM as well. Is it all just basic data, or does it go beyond that? You would be surprised to know that personal data can be found in all sorts of documents. The invoices generated by companies, your payslips, emails, contracts, and so on may all contain personal data (your name, age, social security number, etc.). But did you know that this information cannot be stored in SAP systems without a defined purpose?

As it stands, applying data privacy to SAP systems can be a tough job; however, the following steps can ease the process:

Step 1: The first step is as simple as it gets. It is to identify the personal data, why it was collected, and where it is stored in your system.

Step 2: Once you have identified where it is stored, the next step is to define data retention rules, together with rules for blocking and deleting the data after the defined periods. Retention periods are determined by the purpose for which the personal data was collected, which can range from order management and application management to invoice management, and so on.

Step 3: Once these rules are defined, SAP ILM Retention Management (an evolution of SAP data archiving technology) comes into the picture. It is paramount to ensure that personal data in your SAP systems is archived, deleted and, in some cases, anonymised. Because some data may be needed for future audits, data archiving is a must: it helps you retain the information you need while ensuring legal and regulatory compliance.
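The retention rules from Step 2 can be pictured as a small lifecycle function: after the end of purpose, data stays active for a while, is then blocked, and finally becomes eligible for deletion. The Python sketch below models that idea; the purposes, the periods in `RULES` and all names are hypothetical, and it is not how SAP ILM stores or evaluates its rules.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PurposeRule:
    block_after_days: int   # days after end of purpose until the data is blocked
    delete_after_days: int  # days after end of purpose until the data may be deleted

    def __post_init__(self):
        if not 0 <= self.block_after_days <= self.delete_after_days:
            raise ValueError("blocking must start no later than deletion is allowed")


# Hypothetical rules per purpose; real periods depend on the applicable laws.
RULES = {
    "order_management": PurposeRule(block_after_days=365, delete_after_days=3650),
    "invoice_management": PurposeRule(block_after_days=180, delete_after_days=3650),
}


def lifecycle_status(purpose: str, end_of_purpose: date, today: date, rules=RULES) -> str:
    """Return 'active', 'blocked' or 'deletable' for a record with the given purpose."""
    rule = rules[purpose]  # a KeyError signals a purpose without a defined rule
    days_since = (today - end_of_purpose).days
    if days_since >= rule.delete_after_days:
        return "deletable"
    if days_since >= rule.block_after_days:
        return "blocked"
    return "active"
```

Keeping the rules keyed by purpose mirrors the point made in Step 2: the same personal data can have different retention periods depending on why it was collected.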

Bear in mind that there is no single solution for handling data privacy in SAP systems; rather, it takes a combination of solutions and tools. A key solution, however, is SAP Information Lifecycle Management Retention Management (SAP ILM RM), together with the SAP ILM feature called Blocking & Deletion for master data (customers, vendors, business partners). This solution allows SAP users to define data retention policies and destruction policies that apply at the end of the retention period. In addition, for the SAP data archiving process there are tools such as our own Archiving Sessions Cockpit, which not only smooths the process but also automates it, providing more effective results.

8. Automating data archiving and data deletion with the Archiving Sessions Cockpit

8.1 The need for automated SAP data archiving solutions

We have given you insights into data archiving, its importance, its benefits, and more. Now it is only natural to wonder whether there are quick and effective solutions for archiving your data, and whether they are automated.

Data archiving is a recurring manual job that requires a series of jobs to be reviewed and executed on a regular basis. This is where automation comes in. Automated data archiving solutions help organisations lower their TCO and ensure accurate data compliance in SAP systems. For businesses, cutting costs and migration time by removing obsolete data is an absolute need. With automated archiving software, organisations can not only manage long-term TCO but also achieve a time-effective migration, while mitigating non-compliance risks.

“Trying to do data archiving without that is almost like trying to put somebody on a hamster wheel. It’s hard work. Automating the process with the Archiving Sessions Cockpit (ASC) by TJC Group makes it much easier. It’s an automated process so once you get it up and running, it’s there”.

Mark Holmes, BT Group

Furthermore, automated archiving software offers financial benefits by saving on storage, maintenance and software licensing costs. Most importantly, opting for SAP-certified automated data archiving software also ensures the security of your archived data. Archiving locks your data against time, keeping it from being accessed by other applications. It is a highly secure way of managing long-term data retention requirements for audit compliance. Archived data is secure: it cannot be hacked, and it is automatically retained for as long as legally required.

8.2 Archiving Sessions Cockpit | Your go-to software for SAP data archiving

Introducing the Archiving Sessions Cockpit (ASC): data archiving software that helps you optimise and automate the entire archiving and/or data destruction process. A brainchild of TJC Group, our archiving software manages and controls your archiving runs from one single point. The ASC is an SAP-certified tool and is therefore based on SAP ILM and applies SAP standard principles.

The Archiving Sessions Cockpit automates the full data archiving and ILM process, from the creation to the deletion of archive files, completing tasks that would otherwise require manual intervention.

Because archiving and destruction runs must be reviewed and executed again and again, the ASC ensures that all recurring SAP ILM processes are included and completed reliably. Its automation frees up internal resources for higher-value tasks that bring greater returns on investment in the future. Using the ASC in SAP systems provides massive savings on database storage costs when migrating to S/4HANA, keeps data growth under control after the move, and ensures that processes for privacy policies are executed as planned.
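To illustrate the kind of orchestration such automation performs, the Python sketch below runs one archiving session per archiving object through write, verify, store and delete steps, and stops as soon as a step fails, so that data is never deleted from the database before its archive file has been written and stored. The step callables, the `SessionResult` type and the step order are hypothetical; this is not the ASC's implementation or an SAP API, and `FI_DOCUMNT` and `SD_VBAK` are used only as example archiving object names.

```python
from dataclasses import dataclass, field
from typing import Callable

Step = Callable[[str], bool]  # hypothetical: takes an archiving object name, returns success


@dataclass
class SessionResult:
    archiving_object: str
    steps: list[str] = field(default_factory=list)
    ok: bool = True


def run_session(archiving_object: str, write: Step, verify: Step, store: Step, delete: Step) -> SessionResult:
    """Run write -> verify -> store -> delete, stopping at the first failed step."""
    result = SessionResult(archiving_object)
    for name, step in (("write", write), ("verify", verify), ("store", store), ("delete", delete)):
        if not step(archiving_object):
            result.steps.append(f"{name}:failed")
            result.ok = False
            break  # never delete from the database after an earlier step failed
        result.steps.append(f"{name}:ok")
    return result


def run_schedule(archiving_objects: list[str], write: Step, verify: Step, store: Step, delete: Step) -> list[SessionResult]:
    """Run one session per archiving object, for example from a nightly scheduler."""
    return [run_session(obj, write, verify, store, delete) for obj in archiving_objects]
```

Recording each step's outcome in the result gives an audit trail of what ran and where a session stopped, which is the sort of traceability the benefits below refer to.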

Main benefits of the Archiving Sessions Cockpit for SAP Admins / SAP Managers

- Keep database under control by archiving data that is rarely accessed

- Achieve massive savings on database storage costs both in SAP ECC and SAP S/4HANA.

- When on SAP S/4HANA, avoid scaling up your HANA memory needs to a higher T-shirt tier.

- Prevent manual errors and recurring efforts due to the lack of time

- Automate archiving sessions that can run without user-input as per predefined parameters

- Ensure that company data is fully traceable to comply with Tax/GDPR requirements

- Reduce migration times to move to S/4HANA

Main benefits of the Archiving Sessions Cockpit for SAP Consultants and Project Managers

- Decrease the TCO of SAP by making a right-sized move to S/4HANA

- Achieve long-term savings with the automation of data archiving

- Define clear goals for data volume reduction targets

- Scalable projects based on evolving needs, with the possibility to adapt projects to mergers, divestments or changes in tax regulations

Main benefits for Business Users (Accounting / Tax)

- Quick access to archived data via regular SAP transactions.

- Traceability logs remain available for compliance with tax and audit regulations.

8.3 The development of the Archiving Sessions Cockpit

To you and me, SAP data archiving may just be a method of freeing up storage capacity; for businesses, it is the best way to reduce TCO, comply with regulatory requirements, ensure system security and data privacy, and much more! However, even with data archiving tools, it ain't a leisurely walk.

The fact of the matter is that organisations were tired of restarting a new archiving project every year. Large internal data volumes and the risk of data loss caused by poorly managed processes were additional concerns. Multiple customers requested a solution to automate the archiving process, so there had to be a smoother, better route to a seamless data archiving experience. And thus, the development of the Archiving Sessions Cockpit began. With ASC, organisations can bid farewell to complex and cumbersome manual data archiving, postpone investments in new hardware, and more. It also helps improve the response times of your SAP systems, keeping system users happy.

8.4 Functions of the Archiving Sessions Cockpit

Here is what you will get with our ASC software –

- ASC is designed to automate mass data archiving and destruction, covering both initial and regular runs.

- It helps schedule archiving sessions coordinated with the IT schedules and business calendars.

- ASC manages all necessary jobs until each session is complete.

- It automatically recovers and restarts the sessions in case of any disruptions.

- ASC can manage detailed variants, which allows fine archiving and destruction granularity.

- It maintains traceability logs for users.

- The ASC can manage additional steps such as customer-specific pre- and post-processing, the clearing of SAP archive information structures and the backup of ADK files.

Learn more about automating data archiving with the ASC in this whitepaper:

https://www.tjc-group.com/resource/archiving-sessions-cockpit-asc-whitepaper/

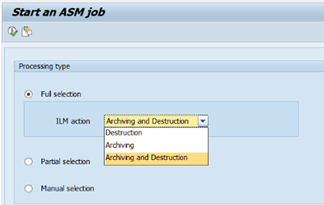

8.5 The Archiving Sessions Cockpit for data deletion

For data destruction to be possible, the ILM Business Function must be activated in the SAP system and the necessary ILM Customizing must be completed. SAP ILM can then be used to archive or destroy data. In the ASC, we can define rules to archive data after X years, and separate rules to destroy the remaining data after Y years.
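As a rough illustration of how such age-based rules work, the Python sketch below decides whether a record should be kept, archived or destroyed. It is not the ASC's implementation: `RetentionRule`, `decide_action` and the use of a single posting date per record are hypothetical simplifications, and real SAP ILM rules are maintained in ILM Customizing with many more conditions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class RetentionRule:
    """Hypothetical rule: archive after N years, destroy after M years (M >= N)."""
    archive_after_years: int
    destroy_after_years: int

    def __post_init__(self) -> None:
        if self.destroy_after_years < self.archive_after_years:
            raise ValueError("destruction cannot come before archiving")


def years_between(start: date, end: date) -> int:
    """Number of full years elapsed between two dates."""
    years = end.year - start.year
    if (end.month, end.day) < (start.month, start.day):
        years -= 1  # anniversary not reached yet this year
    return years


def decide_action(posting_date: date, today: date, rule: RetentionRule) -> str:
    """Return 'keep', 'archive' or 'destroy' for a record of the given age."""
    age = years_between(posting_date, today)
    if age >= rule.destroy_after_years:
        return "destroy"
    if age >= rule.archive_after_years:
        return "archive"
    return "keep"


if __name__ == "__main__":
    rule = RetentionRule(archive_after_years=2, destroy_after_years=10)
    print(decide_action(date(2020, 1, 15), date(2024, 6, 1), rule))  # archive
```

Keeping the destruction check first means a record that is old enough for both rules is destroyed rather than archived again.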

In the ASC, objects are classified into 3 groups:

- Archiving objects, which can only archive data.

- ILM objects, which can both archive and delete data.

- Data destruction objects, which can only delete data. These comprise ILM HR objects and data destruction objects.

If the ILM business function is not activated, the ASC will only suggest working with archiving and ILM objects, not with data destruction objects.
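A minimal sketch of the three object groups and of the filtering described above, assuming a simple flag for whether the ILM business function is active. The enum, the `selectable_objects` helper and the object names (`ARCH_OBJ_1` and so on) are hypothetical placeholders, not real SAP archiving objects or ASC APIs.

```python
from enum import Enum


class ObjectGroup(Enum):
    ARCHIVING = "archiving"           # can only archive data
    ILM = "ilm"                       # can archive and delete data
    DATA_DESTRUCTION = "destruction"  # can only delete data


# What each group is allowed to do, following the classification above.
CAPABILITIES = {
    ObjectGroup.ARCHIVING: {"archive"},
    ObjectGroup.ILM: {"archive", "destroy"},
    ObjectGroup.DATA_DESTRUCTION: {"destroy"},
}


def selectable_objects(objects: dict[str, ObjectGroup], ilm_active: bool) -> list[str]:
    """Objects offered for processing, depending on the ILM business function."""
    if ilm_active:
        allowed = set(ObjectGroup)
    else:
        # Without the ILM business function, data destruction objects are not offered.
        allowed = {ObjectGroup.ARCHIVING, ObjectGroup.ILM}
    return sorted(name for name, group in objects.items() if group in allowed)


if __name__ == "__main__":
    # Hypothetical object names, for illustration only.
    objects = {
        "ARCH_OBJ_1": ObjectGroup.ARCHIVING,
        "ILM_OBJ_1": ObjectGroup.ILM,
        "DEST_OBJ_1": ObjectGroup.DATA_DESTRUCTION,
    }
    print(selectable_objects(objects, ilm_active=False))  # ['ARCH_OBJ_1', 'ILM_OBJ_1']
```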

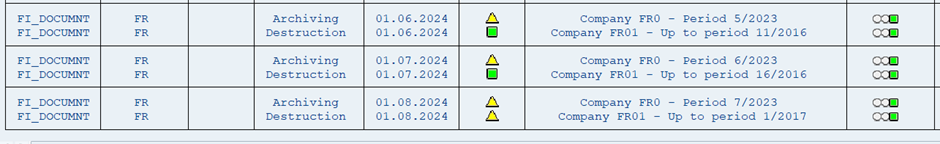

On the Archiving Sessions Management dashboard, we’ll be able to customise the different jobs, either for data archiving or data destruction, and launch them all.

See below an example of classical data archiving jobs as well as data destruction jobs with the ASC:

8.6 Advantages of the Archiving Sessions Cockpit

With ASC, you get the following advantages –

- Our Archiving Sessions Cockpit entirely manages your database growth.

- It helps enhance the technical process of SAP data archiving and data destruction.

- Since the entire process is automated, there are no manual hiccups, ensuring an error-free process.

- It helps retain the gains that you achieve from the archiving project while facilitating regular sessions to maintain the ROI.

- Since ASC schedules the sessions as per the business calendars, there is no interruption in your business operations during the archiving or destruction runs.

8.7 Archiving Sessions Cockpit versus Jobs Scheduler

To execute an SAP job, job scheduler tools require a program and a variant. Variants can be created automatically using variant variables, but this functionality quickly reaches its limits as soon as the objects to be archived are selected not by date but by fiscal period, by residence time or by number ranges. The variant must then be adapted manually before it is used. In addition, variants created via variant variables are based on the current date, so the variants needed to catch up on archiving that has been paused for some time cannot be generated.

The ASC works differently: at a given time, it determines all the sessions to be launched for an object and processes them one after the other. This makes it possible to suspend the execution of certain ILM objects temporarily and restart them later without missing any session. The variants required for each job are created dynamically, regardless of the selection criteria they use, and they select only the data that must be archived or destroyed at that time T. This restricts the amount of data to be processed and drastically reduces execution times.
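To make the catch-up behaviour concrete, here is a hedged Python sketch that derives one variant per fiscal period that has passed its residence time but has not yet been archived. It assumes 12 fiscal periods per year and a residence time expressed in periods; `Period`, `pending_sessions` and the variant keys are illustrative names only, not ASC or SAP selection fields.

```python
from dataclasses import dataclass

PERIODS_PER_YEAR = 12  # assumption: 12 fiscal periods, no special periods


@dataclass(frozen=True)
class Period:
    year: int
    period: int  # 1..PERIODS_PER_YEAR

    def index(self) -> int:
        """Continuous counter so that periods can be compared and iterated."""
        return self.year * PERIODS_PER_YEAR + (self.period - 1)

    @staticmethod
    def from_index(i: int) -> "Period":
        return Period(i // PERIODS_PER_YEAR, i % PERIODS_PER_YEAR + 1)


def pending_sessions(last_archived: Period, current: Period,
                     residence_periods: int) -> list[dict[str, int]]:
    """One variant per period that is past its residence time and not yet archived.

    Unlike a variant built relative to today's date, this also covers every
    period missed while the object was paused.
    """
    newest_eligible = current.index() - residence_periods
    return [
        {"fiscal_year": p.year, "period": p.period}
        for p in (Period.from_index(i)
                  for i in range(last_archived.index() + 1, newest_eligible + 1))
    ]


if __name__ == "__main__":
    for variant in pending_sessions(Period(2023, 10), Period(2024, 6), residence_periods=6):
        print(variant)
```

For example, if an object was last archived up to period 10/2023, the current period is 06/2024 and the residence time is six periods, the sketch returns two sessions (11/2023 and 12/2023). A longer pause simply yields more sessions, none of them skipped.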

An archiving session consists of several jobs, some of which (storage and deletion jobs) are not known when the session is started. With a job scheduler, it is then necessary to define in the object customizing that these jobs will be launched automatically by ADK. But this does not allow you to control the number of jobs that are running simultaneously, which can cause performance issues at the database level. Furthermore, in the event that a job is interrupted, it will be necessary to restart it manually. With ASC, these jobs are launched according to the defined rules (time slots, number of restarts), which ensures that each archiving session will be fully completed.
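The sketch below illustrates, in simplified form, the two controls mentioned above: a cap on how many jobs of a session run at the same time, and a bounded number of automatic restarts per job. It is an assumption-laden model, not how the ASC or ADK schedule jobs: jobs are plain Python callables, time slots are not modelled, and `run_session_jobs` and `make_flaky` are hypothetical helpers.

```python
from collections.abc import Callable
from concurrent.futures import ThreadPoolExecutor


def run_with_restarts(job: Callable[[], None], max_restarts: int) -> str:
    """Run a job, restarting it up to `max_restarts` times if it raises."""
    for _ in range(max_restarts + 1):
        try:
            job()
            return "completed"
        except Exception:
            continue  # simulated interruption: try again
    return "failed"


def run_session_jobs(jobs: dict[str, Callable[[], None]],
                     max_parallel: int, max_restarts: int) -> dict[str, str]:
    """Run all jobs of a session with at most `max_parallel` running at once."""
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        futures = {name: pool.submit(run_with_restarts, job, max_restarts)
                   for name, job in jobs.items()}
        return {name: future.result() for name, future in futures.items()}


def make_flaky(failures: int) -> Callable[[], None]:
    """Hypothetical test job that raises on its first `failures` calls."""
    calls = 0

    def job() -> None:
        nonlocal calls
        calls += 1
        if calls <= failures:
            raise RuntimeError("simulated job interruption")

    return job


if __name__ == "__main__":
    jobs = {"write": make_flaky(0), "delete_1": make_flaky(1), "delete_2": make_flaky(5)}
    print(run_session_jobs(jobs, max_parallel=2, max_restarts=2))
```

With `max_parallel=2` and `max_restarts=2`, a job that fails once is restarted and completes, while a job that keeps failing is reported as failed after three attempts instead of silently leaving the session unfinished.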

Some ILM objects must be processed in a defined sequence, i.e. the session for object A only starts once the session for object B is complete. A job scheduler cannot perform this type of control, so manual intervention is required to launch object A after checking that object B has been processed successfully.
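Sequencing constraints like this form a dependency graph. As a hedged sketch, Python's standard `graphlib.TopologicalSorter` (Python 3.9+) can order the objects so that each one starts only after its prerequisites, and skip it if a prerequisite failed. The object names and the `run_in_sequence` helper are hypothetical; this is not a description of the ASC's internal sequencing logic.

```python
from collections.abc import Callable
from graphlib import TopologicalSorter


def run_in_sequence(dependencies: dict[str, set[str]],
                    run: Callable[[str], bool]) -> dict[str, str]:
    """Process objects so that each starts only after its prerequisites completed.

    `dependencies` maps an object to the objects whose sessions must finish
    first; `run` stands in for executing a session and returns success.
    """
    status: dict[str, str] = {}
    # static_order() yields every prerequisite before the objects that depend on it.
    for obj in TopologicalSorter(dependencies).static_order():
        prerequisites = dependencies.get(obj, set())
        if all(status[p] == "completed" for p in prerequisites):
            status[obj] = "completed" if run(obj) else "failed"
        else:
            status[obj] = "skipped"  # a prerequisite did not complete
    return status


if __name__ == "__main__":
    deps = {"OBJECT_A": {"OBJECT_B"}}  # A may only start after B
    print(run_in_sequence(deps, lambda obj: obj != "OBJECT_B"))
```

If the dependencies accidentally form a cycle, `TopologicalSorter` raises `graphlib.CycleError` instead of running anything, which is a reasonable failure mode for a misconfigured sequence.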

However, it is possible to launch the ASC job via a job scheduler. The ASC job will generate the jobs required for each archiving or destruction session. If necessary, job interception can be enabled in the system so that each job created by the ASC is known to the job scheduler. The job scheduler then does not need to take any action if a job is interrupted, since the ASC handles the interruption directly.

9. Strategy and Planning

9.1 Developing a Comprehensive SAP Data Archiving Strategy

As an IT professional, you’re probably challenged with the stringent need to keep SAP systems highly operational, retaining vast amounts of data, at the lowest cost possible. Piece of cake, right? The exponential growth of data in SAP environments leads to performance bottlenecks, high storage costs, and compliance risks. Can you relate to this? A comprehensive SAP data archiving strategy provides a cost-effective solution to these competing demands, with a clear return on investment and solid results within months.

TJC Group started its operations over 25 years ago providing SAP Data Archiving services and official data archiving training for SAP France. Over the years, we've acquired unparalleled competence in this field worldwide. Our proven methodology is the result of delivering hundreds of projects to hundreds of SAP customers worldwide. We automate the execution of data archiving and ILM with the Archiving Sessions Cockpit, an SAP-certified solution based on SAP standards. The Archiving Sessions Cockpit has turned out to be an absolute game-changer for efficiency gains.

9.2 SAP data archiving framework

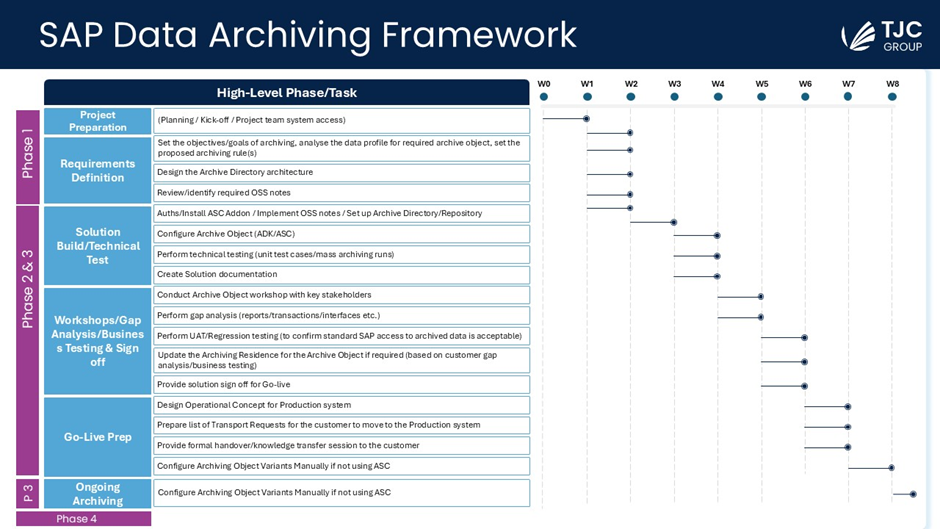

A classical SAP Data Archiving framework includes 4 phases – the last two are recommended but optional. These are the following:

- Phase 1: Analysis and Project preparation

- Phase 2: Implementation phase

- Phase 3 (optional): Ongoing Archiving Services (BPO)

- Phase 4 (optional): Archiving Strategy Review

Let’s see them all one by one.

Phase 1: Technical analysis and preparation of the project

Technical Analysis

The first step along the journey starts with a deep technical analysis to gather the necessary information, analyse the quick-wins and define the scope of the project.

- Identification of the system concerned: database (type and version), SAP release and support package, code page (ASCII, UTF-8, UTF-16) and modules used, among others.

- Analysis of the tables’ volumes and identification of the largest tables

- Identification of the archiving objects

- Definition of the residence and retention period for each object

- Calculation of the volume reduction
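The volume reduction calculation is essentially arithmetic over the largest tables. A minimal sketch, assuming you already know each table's size and the share of its rows that falls outside the residence time; `estimate_reduction`, `largest_tables`, the table names and the figures are made-up placeholders, not measurements from a real system.

```python
def largest_tables(tables: dict[str, tuple[float, float]], n: int) -> list[str]:
    """Names of the n largest tables by current size."""
    return sorted(tables, key=lambda name: tables[name][0], reverse=True)[:n]


def estimate_reduction(tables: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Estimate the volume that archiving could remove.

    `tables` maps a table name to (size_gb, archivable_share), where
    archivable_share is the fraction of rows past their residence time.
    Returns (gb_freed, percent_of_analysed_volume).
    """
    total = sum(size for size, _ in tables.values())
    freed = 0.0
    for name, (size_gb, share) in tables.items():
        if not 0.0 <= share <= 1.0:
            raise ValueError(f"{name}: archivable share must be between 0 and 1")
        freed += size_gb * share
    percent = 100.0 * freed / total if total else 0.0
    return freed, percent


if __name__ == "__main__":
    # Placeholder names and figures, not real measurements.
    tables = {"TABLE_A": (500.0, 0.6), "TABLE_B": (200.0, 0.25)}
    print(largest_tables(tables, 1))
    print(estimate_reduction(tables))
```

With these placeholder figures, 350 GB of the 700 GB analysed (50%) would be eligible for archiving. The space actually reclaimed may differ, for example because of indexes and database reorganisation, so treat the result as an estimate.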

The initial analysis will answer the following questions:

- What are the risks/constraints/legal requirements/retention requirements?

- What are the objects to be archived as a priority?

- Can any data be safely deleted to produce savings?

- What volume gains can be expected, and which performance improvements can be predicted?

- How should the project be managed (tools, method and planning)?

Interviews with key users

Involving the customer’s key users early in the process ensures that the IT project not only meets technical objectives but also delivers meaningful improvements to the business. In this preparatory phase, we will typically interview key users from IT, Finance and Tax departments, who will actively participate in the discussion and definition of the project.

Here’s why it is essential to have such initial talks:

- Understand the organisation’s business processes. Key users have hands-on experience with day-to-day operations and can provide a deep understanding of business processes that may not be documented formally.

- Capture the project requirements accurately. IT projects often fail due to poorly captured or misunderstood requirements. Key users can articulate their specific needs, pain points and expectations, ensuring the project scope aligns with actual business needs.

- Identify the pain points and gaps between teams. The challenges faced by SAP users in IT, Tax and Finance might be different. Their input can help identify gaps between the different teams that might not be obvious from a technical standpoint alone. Listening to their voices helps us identify misalignments to bridge the gap, ensuring our proposal is aligned with everyone’s expectations.

- Validate assumptions. Every project starts with assumptions about how the system is used. Discussions with key users help validate or challenge these assumptions, ensuring that the project is based on real user behaviour and needs.

Knowledge transfer continues throughout the project to upskill the customer's team. During the interviews, we'll also discuss the organisation's plans for future implementations (for example, an SAP S/4HANA migration) or country rollouts.

Recommendations and Report

Based on the findings, the TJC Group data archiving team will craft a comprehensive archiving strategy and a step-by-step action plan to meet the needs identified. The plan will include the key metrics, measures and deliverables as well as the ROI of the archiving project. Before the project kick-off, a detailed report is handed over to the client with a set of recommendations and data volume reduction targets.

The main activities that will be carried out include (but are not limited to):

- Define an accurate data archiving scope.

- Identify the archiving objects to be used.

- Analyse solutions for retrieving both online and archived data

- Improve performance of the SAP system

- Identify workflows for an archiving strategy

- Risk analysis regarding archiving (Tax, data, document)

Phase 2: Implementation phase

This is a hands-on approach to deploying the archiving project, making sure the archiving activities work properly, that prerequisites are met and that an archiving ratio of 95% or more is achieved. We also ensure the archive files are generated in a way that allows for future deletion and/or access when needed.

If the project extends into a data privacy or SAP ILM project, in this phase we also make sure the ILM processes run smoothly, achieving an archiving ratio of 100% when required. In addition, prerequisites such as blocking, IRF and retention rules are mapped and maintained using the Archiving Sessions Cockpit for ILM.

This phase has 5 steps:

STEP 1 – Solution Build and Technical Test

- Kick-off with the client's key stakeholders and the TJC Group implementation team.

- Set up authorisations / install the ASC add-on / implement OSS notes / set up the archive directory and repository.

- Configure Archive Objects (ADK/ASC).

- Perform technical testing (unit test cases/mass archiving runs).

- Create solution documentation.

STEP 2 – Workshops

- Conduct Archive Object workshop with key stakeholders in the IT, Finance and Tax departments and any other relevant teams.

- Gather requirements and documentation available.

STEP 3 – Gap Analysis/Business Testing & Sign off

- Perform gap analysis (reports/transactions/interfaces etc.).